Overcoming the Obstacles of SMS Load Testing at 10 Million Sends

Overview

Load and stress testing go hand in hand with any messaging platform. Not long ago, I had the chance to run a bulk SMS use case: sending 10 million messages in a single run. The test had to go well beyond the bare minimum and show clear results on performance, scalability, and resilience. In this blog post, I will outline the obstacles we faced during the high-throughput SMS tests and explain how we met them through planning, tooling, and cross-team coordination.

Challenge #1: Infrastructure Bottlenecks

Problem:

Sending 10 million SMS messages at once places heavy demands on the database, queue throughput, compute, and network bandwidth. Our SMS messaging test environment was not sized for this load (and to some degree still is not), which caused regular timeouts and service failures.

Solution:

Worked with DevOps to put auto-scaling groups and load-balanced instances in place, and expanded the scope of instance monitoring.

Challenge #2: Queue & Throughput Limitations

Problem:

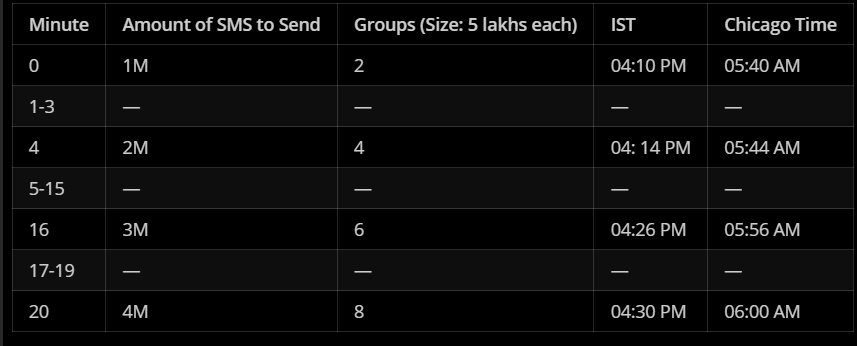

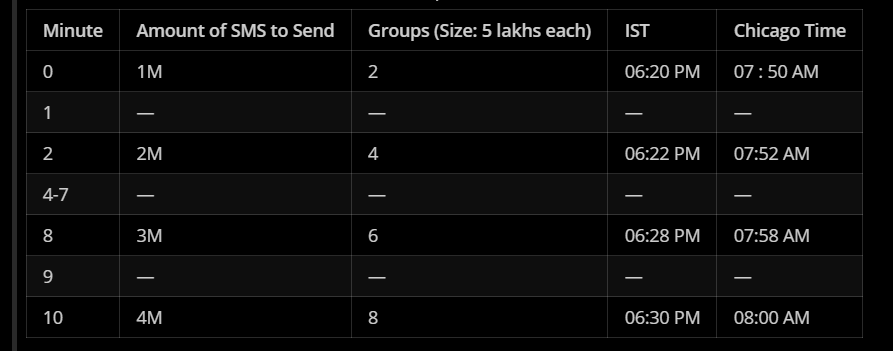

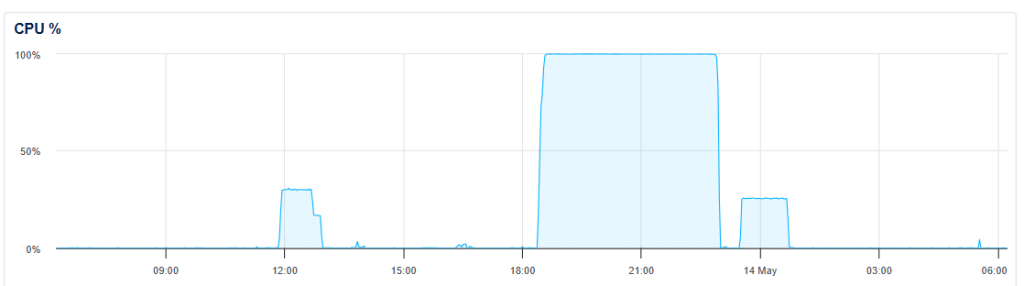

The messaging system depended on message queues (such as RabbitMQ). Each queue hit its own throughput ceiling, which slowed message processing: 10 million SMS sent at intervals of 10 to 30 minutes took up to 4 hours 30 minutes to process, and CPU usage reached 100%.
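To put that figure in perspective, here is a small arithmetic sketch of the average rate it implies. It assumes the full 10 million messages were processed over the whole 4 hours 30 minutes; the function name `average_throughput` is illustrative and not part of our tooling.

```python
def average_throughput(total_messages: int, hours: int, minutes: int = 0) -> float:
    """Return the average number of messages processed per second."""
    seconds = hours * 3600 + minutes * 60
    if seconds <= 0:
        raise ValueError("duration must be positive")
    return total_messages / seconds


if __name__ == "__main__":
    # 10 million messages over 4 h 30 min (16,200 seconds)
    rate = average_throughput(10_000_000, hours=4, minutes=30)
    print(f"{rate:.0f} messages per second")  # prints "617 messages per second"
```

This is an average only; the real rate during the test varied with queue depth and CPU saturation.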

Solution:

- Implemented batch message publishing for more efficient queue operations.

- Tuned prefetch limits, acknowledgment modes, and consumer scaling in the queue configuration.

- Identified choke points with real-time monitoring in Prometheus and Grafana, so bottlenecks could be cleared while the system was under load.
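The batch publishing idea can be sketched in a few lines of Python. This is a minimal illustration rather than our production publisher: `publish_batch` is a hypothetical callable standing in for whatever the queue client exposes, and the batch size of 500 is an assumed value, not the one we used.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def batched(messages: Iterable, batch_size: int) -> Iterator[List]:
    """Yield consecutive lists holding at most batch_size messages each."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(messages)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def publish_in_batches(
    messages: Iterable,
    publish_batch: Callable[[List], None],  # hypothetical publisher hook
    batch_size: int = 500,                  # assumed value for illustration
) -> int:
    """Hand messages to the publisher one batch at a time.

    Returns the number of publish calls made.
    """
    calls = 0
    for batch in batched(messages, batch_size):
        publish_batch(batch)
        calls += 1
    return calls


if __name__ == "__main__":
    sent = []  # stands in for the queue
    demo = ({"to": f"+1555{n:07d}", "body": "load test"} for n in range(1200))
    calls = publish_in_batches(demo, sent.append, batch_size=500)
    print(calls, [len(b) for b in sent])  # prints "3 [500, 500, 200]"
```

Because the input is consumed lazily, the full 10 million payloads never need to sit in memory at once. The right batch size depends on message size and broker limits, so it is worth measuring rather than guessing.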

Challenge #3: Outbox Timeout at Scale

Problem:

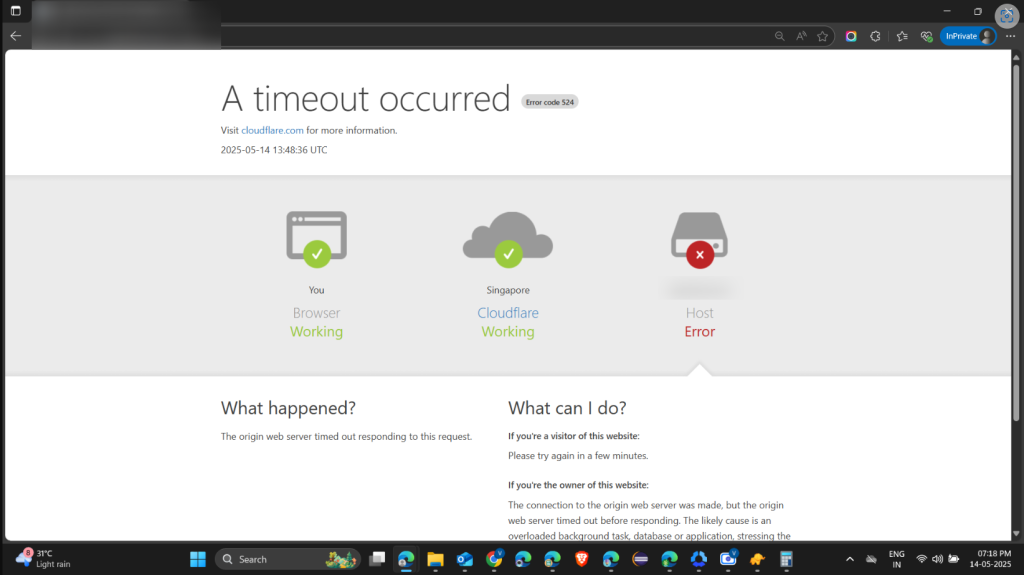

During the test, once I scaled up to 10 million contacts, the outbox service became increasingly unresponsive and started returning timeout errors. Messages backed up in the system and could not move on to the next processing stage.

Solution:

- Traced the problem on the spot to the message dispatching layer, working alongside DevOps, who tuned the configuration settings of the outbox service.

- Adjusted timeouts on dependent services and increased overall outbox service throughput.

- Monitored system metrics and logs after tuning to confirm that the target throughput was reached.

Challenge #4: Test Data Management

Problem:

Managing test data for 10 million unique SMS messages was inefficient, and duplicates were a constant risk. Overlapping numbers or malformed payloads could skew results.

Solution:

- Used Python scripts to automate the creation of unique, valid phone numbers alongside distinct message templates.

- Checked payload integrity before dispatch with purpose-built data validators.
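A simplified sketch of that kind of generator and validator is shown below. The `+1555` prefix, the payload keys `to` and `body`, the message template, and the 160-character limit (one GSM-7 segment) are all assumptions made for illustration; the actual scripts and payload schema differed.

```python
import re
from typing import Dict, Iterator, List

# E.164 shape: '+', a non-zero digit, then up to 14 more digits.
E164_PATTERN = re.compile(r"\+[1-9]\d{1,14}")
MAX_BODY_CHARS = 160  # assumed limit: one GSM-7 SMS segment


def generate_numbers(count: int, prefix: str = "+1555", width: int = 7) -> Iterator[str]:
    """Yield `count` unique numbers by zero-padding a running counter."""
    if count > 10 ** width:
        raise ValueError("count does not fit in the chosen width")
    for n in range(count):
        yield f"{prefix}{n:0{width}d}"


def validate_payload(payload: Dict) -> List[str]:
    """Return a list of problems found; an empty list means the payload is valid."""
    errors = []
    if not E164_PATTERN.fullmatch(str(payload.get("to", ""))):
        errors.append("invalid phone number")
    body = payload.get("body")
    if not isinstance(body, str) or not body.strip():
        errors.append("empty body")
    elif len(body) > MAX_BODY_CHARS:
        errors.append("body too long")
    return errors


def build_payloads(count: int, template: str = "Load test message {seq}") -> Iterator[Dict]:
    """Pair each unique number with a distinct message body."""
    for seq, number in enumerate(generate_numbers(count)):
        yield {"to": number, "body": template.format(seq=seq)}


if __name__ == "__main__":
    payloads = list(build_payloads(1000))
    bad = [p for p in payloads if validate_payload(p)]
    unique = len({p["to"] for p in payloads})
    print(unique, len(bad))  # prints "1000 0"
```

Deriving numbers from a counter guarantees uniqueness by construction, which avoids a separate de-duplication pass over 10 million rows.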

Challenge #5: Performance Metrics Monitoring

Problem:

Collecting meaningful metrics (latency, delivery rate, failure rate) at such a massive volume was chaotic at first.

Solution:

- Integrated APM tools such as New Relic and Datadog for backend and message-path tracing.

- Set the following key SLAs: end-to-end latency, SMS delivery success rate, and retry rate.

- Built custom dashboards that filtered out irrelevant metrics and visualized the rest in near real time.
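As a rough illustration of how the three SLA figures can be derived from raw send records, consider the sketch below. The `SendRecord` fields and the nearest-rank percentile method are assumptions for the example; in the actual test these numbers came from the APM tools and dashboards.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class SendRecord:
    """One message outcome (hypothetical shape for this example)."""
    latency_ms: float  # end-to-end latency
    delivered: bool    # True if the message reached the handset
    attempts: int      # 1 means no retry was needed


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records: Iterable[SendRecord]) -> Dict:
    """Compute delivery success rate, retry rate, and p95 latency."""
    records = list(records)
    total = len(records)
    if total == 0:
        raise ValueError("no records to summarize")
    delivered = [r for r in records if r.delivered]
    retried = sum(1 for r in records if r.attempts > 1)
    latencies = [r.latency_ms for r in delivered]
    return {
        "total": total,
        "delivery_success_rate": len(delivered) / total,
        "retry_rate": retried / total,
        # Latency is only meaningful for messages that were delivered.
        "p95_latency_ms": percentile(latencies, 95) if latencies else None,
    }
```

Percentiles are generally more useful than averages here, since a handful of very slow deliveries can hide behind a healthy mean.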

Challenge #6: Failure Recovery and Retry Mechanisms

Problem:

The primary cause of failures was a large number of transient network faults and external SMS gateway problems.

Solution:

- Worked with developers to simulate gateway failures through chaos testing.

- Validated the retry and cancellation logic, along with the exponential backoff algorithms.

- Confirmed that the queues were configured to route unrecoverable messages to the dead-letter queue.
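The behavior being validated looks roughly like the sketch below. It is an illustration under stated assumptions, not the system's actual code: `TransientGatewayError`, the 0.5-second base delay, the 30-second cap, the five-attempt limit, and the in-memory `dead_letters` list (standing in for a real dead-letter queue) are all hypothetical.

```python
import random
import time
from typing import Callable, List


class TransientGatewayError(Exception):
    """Hypothetical error type for a temporary gateway or network fault."""


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0) -> float:
    """Delay after failed attempt number `attempt` (1-based): base * 2^(attempt-1), capped."""
    return min(cap, base * 2 ** (attempt - 1))


def send_with_retry(
    message: dict,
    send: Callable[[dict], None],
    dead_letters: List[dict],
    max_attempts: int = 5,
    sleep: Callable[[float], None] = time.sleep,
    jitter: Callable[[], float] = random.random,
) -> bool:
    """Try to send; back off exponentially between failures.

    Returns True on success. After max_attempts failures the message is
    appended to dead_letters and False is returned.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            send(message)
            return True
        except TransientGatewayError:
            if attempt == max_attempts:
                break
            # Scale the delay by a random factor so retries do not align.
            sleep(backoff_delay(attempt) * jitter())
    dead_letters.append(message)
    return False
```

Passing `sleep` and `jitter` as parameters keeps the function testable: a chaos test can inject a gateway stub that fails a fixed number of times and assert on the recorded delays without waiting in real time.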

Challenge #7: Cross-Team Collaboration

Problem:

A test of this scale required close coordination across multiple teams: QA, DevOps, and Backend.

Solution:

- Set up war rooms and live dashboards for the duration of the testing window.

- Designed ACTs for issue tracking, escalation, and rapid resolution.

- Compiled a timestamped log of root causes for every issue captured, for post-mortem review.

- Verified the observations against the initial pre-test strategy.

- Planning determines everything – environment readiness, data strategy, and coordination need to be locked before the test.

Conclusion

Testing 10 million SMS messages has so far been a one-of-a-kind QA exercise for me, one that brought all of my skills together in a single test effort. It challenged me throughout, and it was deeply gratifying. It taught us many lessons about system scalability, fault tolerance, and teamwork. These multifaceted challenges are a reminder of how critical QA is to a business and its technology, and of how important it is for performance to meet and exceed its targets. As gatekeepers of robust systems, we uncover shortcomings through drills like this one.